charm AT lists.cs.illinois.edu

Subject: Charm++ parallel programming system


- From: "Van Der Wijngaart, Rob F" <rob.f.van.der.wijngaart AT intel.com>

- To: "Chandrasekar, Kavitha" <kchndrs2 AT illinois.edu>, "White, Samuel T" <white67 AT illinois.edu>

- Cc: Phil Miller <unmobile AT gmail.com>, "Totoni, Ehsan" <ehsan.totoni AT intel.com>, "Langer, Akhil" <akhil.langer AT intel.com>, "Harshitha Menon" <harshitha.menon AT gmail.com>, "charm AT cs.uiuc.edu" <charm AT cs.uiuc.edu>

- Subject: RE: [charm] When to migrate

- Date: Tue, 6 Dec 2016 22:51:20 +0000


Hi all,

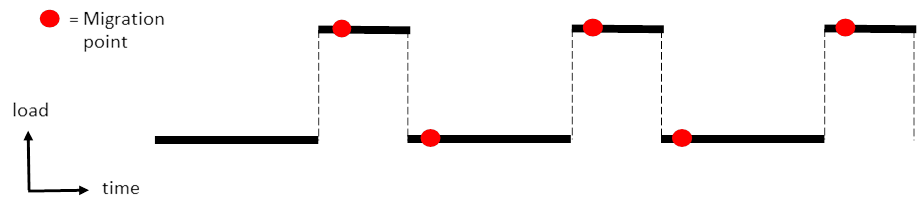

I have been running my code with the placement of the AMPI_Migrate calls as indicated in the figure below. I delay the time of that call between one and five time steps after the load changes, but I see zero effect on performance (this is on a shared memory system, using isomalloc, so the migration does not require serious data transfer).

I would expect that with a delay of zero time steps the runtime has no information yet about the changed load, so does not know how to migrate. With one step it knows something, and with a couple more even more. Delaying much longer is no good, because the load will have changed without effective migration executed. So I would expect an initial increase in performance with increasing delay, and subsequently performance decrease if I delay migration even more. The data is noisy, but it is clear migration delay has negligible effect.

I have two questions:

1. How does the runtime actually collect data about load balance? Is it continuous, or only when AMPI_Migrate is called? If the latter, then I’m in trouble, because the runtime would not be utilizing the migration delay to learn more about the load balance.

2. How can I make effective use (if any) of start/stop measurement calls? Should they be used to demarcate a few time steps before a load change occurs, so the runtime can learn?

Thanks!

Rob

From: Van Der Wijngaart, Rob F

Hi Kavitha,

One last question. If I have an AMPI code that I have linked with commonLB and that I run with a valid +balancer argument, but I do not call AMPI_Migrate anywhere in the code, does the runtime still collect load balance information? If so, is there a charmrun command that I can give that prevents the runtime from doing that?

I know I could use the functions AMPI_Load_stop_measure(void) and AMPI_Load_start_measure(void), but I prefer to specify it on the command line (otherwise I need to supply yet another input parameter and parse it in the code).

Thanks!

Rob

From: Van Der Wijngaart, Rob F

Below is to illustrate how I understood where I should place the AMPI_Migrate calls in my simulation. Is this correct? Thanks!

Rob

From: Van Der Wijngaart, Rob F

Thanks, Kavitha, but now I am a little confused. How should I use AMPI_Load_start/stop_measure in conjunction with AMPI_Migrate? Are they only used with the Metabalancer, or also with the fixed balancers?

In my case changes in load occur only at discrete points in time; they do not grow or shrink continually. So migration, if done at all, should be done at one of these discrete points, or it will have no effect. If I am supposed to bracket those points with calls to AMPI_Load_start/stop_measure, would the sequence of calls be something like this: AMPI_Load_start_measure, wait a few time steps for the load to change, AMPI_Migrate, wait a few more time steps, AMPI_Load_stop_measure?

Thanks!

Rob

From: Chandrasekar, Kavitha [mailto:kchndrs2 AT illinois.edu]

When using load balancers without Metabalancer, it is sufficient to make the AMPI_Migrate calls when the imbalance appears. Phil pointed out a couple of things regarding this:

1. The AMPI_Migrate calls would need to be placed a few time steps after the imbalance appears, since load imbalance in the ranks would be known to the load balancing framework only at the end of the time step.

2. The information supplied to the load balancing framework would be more accurate if the LB instrumentation is turned off to start with and turned on a few time steps before the load imbalance appears. This can be repeated each time load imbalance occurs. The calls to turn instrumentation off and on are AMPI_Load_stop_measure(void) and AMPI_Load_start_measure(void), respectively.
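The two points above can be sketched as a small scheduling helper. This is only an illustration under stated assumptions, not code from the thread: the names `lb_action` and `lb_schedule`, the parameters `lead` and `delay`, and the model of a load change at every multiple of `change_interval` are all hypothetical. Turning instrumentation off again right after the migration request is one reading of "this can be repeated each time load imbalance occurs". The AMPI calls themselves appear only in comments, so the helper compiles without AMPI.

```c
#include <assert.h>

/* Hypothetical action codes; these names are not part of AMPI. */
typedef enum { LB_NONE, LB_START_MEASURE, LB_MIGRATE_AND_STOP } lb_action;

/*
 * Decide what to do at time step `step`, assuming the load changes at every
 * step that is a positive multiple of `change_interval`.
 *   lead  : number of steps before a load change at which to turn
 *           instrumentation on
 *   delay : number of steps after a load change at which to request migration
 * Instrumentation is assumed to be off at startup, e.g. via a call to
 * AMPI_Load_stop_measure() before the time loop.
 */
lb_action lb_schedule(int step, int change_interval, int lead, int delay)
{
    assert(step >= 0 && change_interval > 0 && lead > 0 && delay > 0);
    assert(lead + delay < change_interval);

    int phase = step % change_interval;  /* steps since the last multiple */

    if (phase == change_interval - lead)
        return LB_START_MEASURE;     /* here: AMPI_Load_start_measure() */

    /* No load change has happened before step == change_interval. */
    if (phase == delay && step >= change_interval)
        return LB_MIGRATE_AND_STOP;  /* here: AMPI_Migrate(...), then
                                        AMPI_Load_stop_measure() */
    return LB_NONE;
}
```

With `change_interval = 10`, `lead = 2`, and `delay = 3`, the helper asks for instrumentation to start at steps 8, 18, 28, and so on, and for migration at steps 13, 23, 33, and so on. The argument list of AMPI_Migrate depends on the AMPI version (recent versions take an MPI_Info hints argument), so check the manual for the release in use.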

A clarification regarding the use of +MetaLB: the option needs to be specified alongside the +balancer <loadbalancer> option.

Thanks, Kavitha

From: Van Der Wijngaart, Rob F [rob.f.van.der.wijngaart AT intel.com]

OK, thanks, Kavitha, I’ll do that. Should I apply this method to all load balancers, or only to MetaLB?

Rob

From: Chandrasekar, Kavitha [mailto:kchndrs2 AT illinois.edu]

It would be useful to call AMPI_Migrate every few time steps. The load statistics collection happens at the AMPI_Migrate calls. If there is observed load imbalance, which I understand would be when the refinement appears and disappears, then Metabalancer would calculate the load balancing period based on historical data. So, it would be useful to call it more often than only at the time steps with the load imbalance.

Thanks, Kavitha

From: Van Der Wijngaart, Rob F [rob.f.van.der.wijngaart AT intel.com]

Hi Kavitha,

After a lot of debugging and switching to the 6.7.1 development version (that fixed the string problem, as you and Sam noted), I can now run my Adaptive MPI code consistently and without fail, both with and without explicit PUP routines (currently on a shared memory system). I haven’t tried the meta load balancer yet, but will do so shortly. I did want to share the structure of my code with you, to make sure I am and will be doing the right thing.

This is an Adaptive Mesh Refinement code, in which I intermittently add a new discretization grid (a refinement) to the original grid (AKA background grid). I do this in a very controlled fashion, where I exactly specify the interval (in number of time steps) at which the refinement appears, and how long it is present. This is a cyclical process. Obviously, the amount of work goes up (for some ranks) when a refinement appears, and goes down again when it disappears.

Right now I place an AMPI_Migrate call each time a refinement has just appeared, and when it has just disappeared. So each time I call it something has changed. I have a number of parameters that I vary in my current test suite, including the over-decomposition factor, and the load balancing policy (RefineLB, RefineSwapLB, RefineCommLB, GreedyLB, and GreedyCommLB). I will add MetaLB to that in my next round of tests.

My question is whether my approach for when to call AMPI_Migrate is correct. Simply put, I only call AMPI_Migrate when the work structure (work assignment to ranks) has changed, and not otherwise. What do you think, should I call it every time step? Note that calling it every so many time steps without regard for when the refinement appears and disappears wouldn’t make much sense. I’d be sampling the workload distribution at a frequency unrelated to the refinement frequency. Thanks in advance!
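The cyclic refinement pattern described above, and the "call AMPI_Migrate only when the work structure has changed" policy, could be modeled roughly as follows. This is a sketch under assumptions, not the actual code discussed in this thread: the function names, the parameters `period` and `duration`, and the choice to place the refinement in the last `duration` steps of each cycle are all hypothetical.

```c
#include <assert.h>

/*
 * Assumed model: within every cycle of `period` time steps, the refinement
 * is present during the last `duration` steps and absent otherwise.
 * Returns 1 if the refinement is present at `step`, 0 if not.
 */
int refinement_active(int step, int period, int duration)
{
    assert(step >= 0 && period > 0 && duration > 0 && duration < period);
    return (step % period) >= (period - duration);
}

/*
 * Returns 1 if the refinement has just appeared or just disappeared at
 * `step`, i.e. the work assignment differs from the previous step.
 * Under the policy described above, these are the steps at which
 * AMPI_Migrate would be called.
 */
int work_changed(int step, int period, int duration)
{
    if (step == 0)
        return 0;  /* no previous step to compare against */
    return refinement_active(step, period, duration)
        != refinement_active(step - 1, period, duration);
}
```

For example, with `period = 10` and `duration = 4`, the refinement is present at steps 6 through 9 of each cycle, so `work_changed` is true at steps 6, 10, 16, 20, and so on.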

Rob

From: Chandrasekar, Kavitha [mailto:kchndrs2 AT illinois.edu]

The meta-balancer capability to decide when to invoke a load balancer is available with the +MetaLB command line argument. It relies on the AMPI_Migrate calls to collect statistics to decide when to invoke the load balancer. However, in the current release, there is a bug in AMPI_Migrate's string handling, so it might not work correctly.

The meta-balancer capability to select the optimal load balancing strategy is expected to be merged to mainline charm in the near future. I will update the manual to include the usage of meta-balancer.

Thanks, Kavitha

From: samt.white AT gmail.com [samt.white AT gmail.com] on behalf of Sam White [white67 AT illinois.edu]

Yes, Kavitha will respond on Metabalancer. MPI_Comm's are int's in AMPI. We should really have APIs in our C and Fortran PUP interfaces to hide these details from users, so thanks for pointing it out.

On Tue, Nov 22, 2016 at 3:01 PM, Van Der Wijngaart, Rob F <rob.f.van.der.wijngaart AT intel.com> wrote:


